
[Machine Learning] 17. Boosting

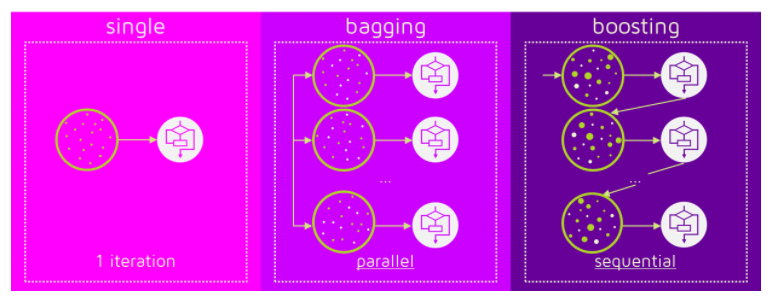

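Boosting

- Rather than analyzing randomly drawn samples the way bagging does, boosting combines rules that are each only slightly better than chance to build a more accurate predictive model
- In short, it combines several weak models into one strong model
- Bagging connects its classifiers in parallel and considers the results from all of them at once
- => The classifiers do not influence one another during training
- Boosting connects its classifiers sequentially, so the result of each stage affects how the next classifier is trained and what it predicts
- Types of boosting techniques
- AdaBoost: weight-based boosting
- Gradient Boosting: boosting on residual errors
- XGBoost: an improved gradient boosting implementation (recommended!)
- LightGBM: a gradient boosting implementation intended to be faster and lighter than XGBoost (recommended!)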
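To make the "sequential, residual-based" idea in the list above concrete, here is a minimal NumPy-only sketch. It assumes a toy 1-D regression problem (not the classification data used in this post) and uses one-split "stumps" as the weak models. The helper names `fit_stump` and `predict_stump`, the data, and the learning rate are all illustrative choices, not anything from a library.

```python
import numpy as np

def fit_stump(x, r):
    """Find the single threshold on 1-D x that best fits targets r (least squares)."""
    best = None
    for t in np.unique(x)[:-1]:  # skip the max so the right side is never empty
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    _, t, left_value, right_value = best
    return t, left_value, right_value

def predict_stump(stump, x):
    t, left_value, right_value = stump
    return np.where(x <= t, left_value, right_value)

# Toy data (assumption): a noisy sine curve
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0, 6, 200))
y = np.sin(x) + rng.normal(0, 0.1, 200)

learning_rate = 0.5
pred = np.full_like(y, y.mean())          # stage 0: predict the mean
mse_history = [np.mean((y - pred) ** 2)]

for _ in range(30):
    residual = y - pred                   # what the previous stages still get wrong
    stump = fit_stump(x, residual)        # the next weak model is trained on that error
    pred += learning_rate * predict_stump(stump, x)
    mse_history.append(np.mean((y - pred) ** 2))

print(f"MSE at stage 0: {mse_history[0]:.4f}, after 30 stages: {mse_history[-1]:.4f}")
```

Each stump on its own is a very weak predictor, but because every stage is fitted to the error left by the previous ones, the training error shrinks step by step. Bagging, by contrast, would fit each model independently.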

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns

from sklearn.model_selection import train_test_split
from sklearn.ensemble import AdaBoostClassifier
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import confusion_matrix
from sklearn.metrics import accuracy_score, f1_score
from sklearn.metrics import recall_score, precision_score
from sklearn.metrics import roc_curve, roc_auc_score

# !pip install xgboost lightgbm
from xgboost import XGBClassifier
from lightgbm import LGBMClassifier

from sklearn.datasets import make_blobs

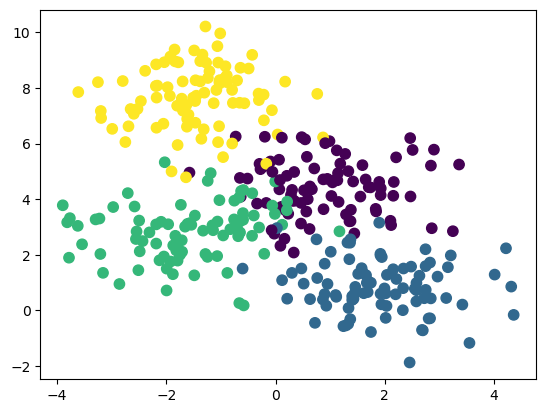

X, y = make_blobs(n_samples=350, centers=4, random_state=0, cluster_std=1.0)
plt.scatter(X[:,0], X[:,1], c=y, s=55)
plt.show()

X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.7, stratify=y, random_state=2211211235)

Running AdaBoost

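# learning_rate: the learning rate (scales each classifier's contribution)
# algorithm: the weighting algorithm
# SAMME.R: weights based on predicted class probabilities (soft-voting style)
# SAMME: weights based on predicted class labels (hard-voting style)
# Note: recent scikit-learn releases deprecate SAMME.R, so this argument may need to be dropped there.
from sklearn.tree import DecisionTreeClassifier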

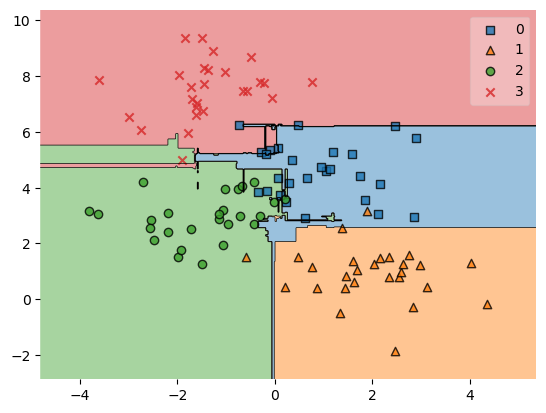

adclf = AdaBoostClassifier(DecisionTreeClassifier(max_depth=4), n_estimators=100, learning_rate=0.5, algorithm='SAMME.R')
adclf.fit(X_train, y_train)
# AdaBoostClassifier(base_estimator=DecisionTreeClassifier(max_depth=4), learning_rate=0.5, n_estimators=100)

adclf.score(X_train, y_train)
# 1.0

pred = adclf.predict(X_test)

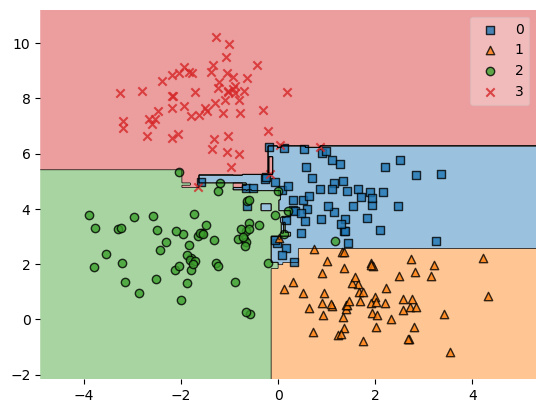

accuracy_score(y_test, pred)
# 0.8867924528301887

from mlxtend.plotting import plot_decision_regions
plot_decision_regions(X_test, y_test, adclf)
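Because AdaBoost adds its classifiers one after another, it can be useful to see how test accuracy changes as stages accumulate. The sketch below uses scikit-learn's `staged_score`. It assumes depth-1 trees, 50 estimators, and its own train/test split with `random_state=0`, so its numbers will not match the scores reported above. The `algorithm` argument is left at the library default so that the snippet does not depend on a particular scikit-learn version.

```python
from sklearn.datasets import make_blobs
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Same kind of toy data as in the post; the split seed here is an assumption
X, y = make_blobs(n_samples=350, centers=4, random_state=0, cluster_std=1.0)
X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, train_size=0.7, stratify=y, random_state=0)

# Very weak base learners (depth-1 trees) to make the effect of boosting visible
clf = AdaBoostClassifier(DecisionTreeClassifier(max_depth=1),
                         n_estimators=50, learning_rate=0.5)
clf.fit(X_tr, y_tr)

# staged_score yields the accuracy after each boosting stage
staged = list(clf.staged_score(X_te, y_te))
for stage in (0, len(staged) // 2, len(staged) - 1):
    print(f"after {stage + 1:2d} estimators: test accuracy = {staged[stage]:.3f}")
```

Boosting can stop early, so `staged` may hold fewer entries than `n_estimators`.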

Running Gradient Boosting

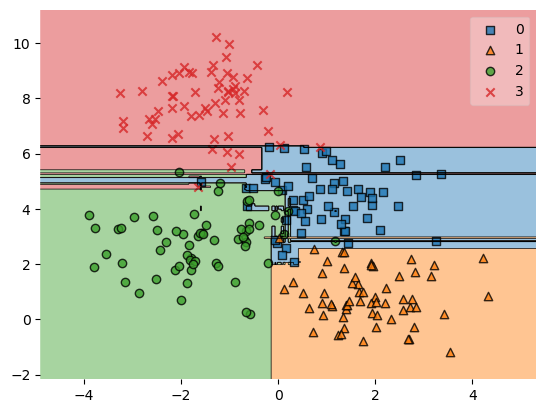

gdclf = GradientBoostingClassifier(max_depth=5, n_estimators=100)
gdclf.fit(X_train, y_train)
# GradientBoostingClassifier(max_depth=5)

gdclf.score(X_train, y_train)
# 1.0

pred = gdclf.predict(X_test)

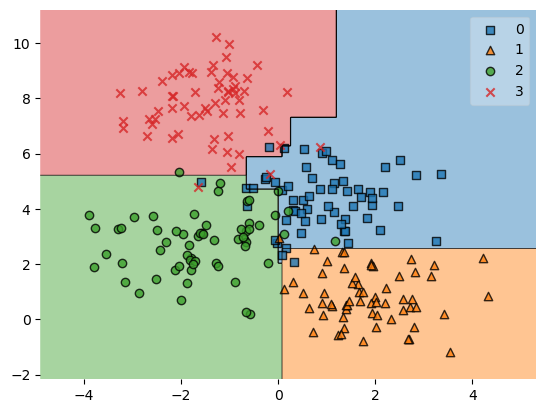

accuracy_score(y_test, pred)
# 0.8773584905660378

plot_decision_regions(X_train, y_train, gdclf)
plt.show()
# mlxtend emits a Matplotlib UserWarning here: an edgecolor ('black') was passed for an unfilled marker ('x'), so Matplotlib ignores the edgecolor in favor of the facecolor.
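The scores above (1.0 on the training data versus about 0.877 on the test data) suggest that the depth-5 model fits the training set very closely. One way to probe this is to compare training and test accuracy for several values of `max_depth`. This is only a sketch: the depths tried and the split seed are assumptions, and the numbers it prints will differ from those in this post.

```python
from sklearn.datasets import make_blobs
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

X, y = make_blobs(n_samples=350, centers=4, random_state=0, cluster_std=1.0)
X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, train_size=0.7, stratify=y, random_state=0)

results = {}
for depth in (1, 3, 5):
    model = GradientBoostingClassifier(max_depth=depth, n_estimators=100,
                                       random_state=0)
    model.fit(X_tr, y_tr)
    # Store (training accuracy, test accuracy) for this tree depth
    results[depth] = (model.score(X_tr, y_tr), model.score(X_te, y_te))

for depth, (train_acc, test_acc) in results.items():
    print(f"max_depth={depth}: train={train_acc:.3f}  test={test_acc:.3f}")
```

A large gap between the two columns for a given depth is a hint that the trees may be deeper than the data needs.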

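Running XGBoost

- A technique frequently used by winners of Kaggle data analysis competitions
- Improves on both the speed and the predictive performance of GBM
- The core XGBoost library is written in C++
- It is therefore used from sklearn through a wrapper class such as XGBClassifier
- xgboost.readthedocs.io
- Installation (v0.90 as of 2020-01-31)
- pip3 install xgboost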
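To illustrate what a "wrapper class" does, here is a toy sketch that does not use XGBoost at all. `NativeCentroidModel` is a hypothetical stand-in for a native training core with its own `train`/`infer` methods, and `CentroidWrapper` adapts it to scikit-learn's `fit`/`predict` convention. Both names are made up for this example. Once wrapped, the model works with scikit-learn utilities such as `cross_val_score`, which is the role `XGBClassifier` plays for XGBoost.

```python
import numpy as np
from sklearn.base import BaseEstimator, ClassifierMixin
from sklearn.datasets import make_blobs
from sklearn.model_selection import cross_val_score

class NativeCentroidModel:
    """Hypothetical non-sklearn core: a nearest-centroid classifier."""
    def train(self, features, labels):
        self.labels_ = np.unique(labels)
        self.centroids_ = np.array(
            [features[labels == k].mean(axis=0) for k in self.labels_])

    def infer(self, features):
        # Squared distance from every sample to every centroid
        dists = ((features[:, None, :] - self.centroids_[None, :, :]) ** 2).sum(axis=2)
        return self.labels_[dists.argmin(axis=1)]

class CentroidWrapper(ClassifierMixin, BaseEstimator):
    """Adapter that exposes the core through the scikit-learn estimator interface."""
    def fit(self, X, y):
        X, y = np.asarray(X), np.asarray(y)
        self.core_ = NativeCentroidModel()
        self.core_.train(X, y)
        self.classes_ = self.core_.labels_
        return self

    def predict(self, X):
        return self.core_.infer(np.asarray(X))

X, y = make_blobs(n_samples=350, centers=4, random_state=0, cluster_std=1.0)
scores = cross_val_score(CentroidWrapper(), X, y, cv=5)
print("5-fold accuracy:", scores.round(3))
```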

import xgboost
xgboost.__version__
# '1.7.1'

# objective: the learning objective for classification
# binary:logistic: binary classification
# multi:softmax: multiclass classification
xgclf = XGBClassifier(n_estimators=10, max_depth=4, learning_rate=0.5, objective='multi:softmax')
xgclf.fit(X_train, y_train)
# XGBClassifier(base_score=0.5, booster='gbtree', callbacks=None, colsample_bylevel=1, colsample_bynode=1, colsample_bytree=1,
# early_stopping_rounds=None, enable_categorical=False,
# eval_metric=None, feature_types=None, gamma=0, gpu_id=-1,
# grow_policy='depthwise', importance_type=None,
# interaction_constraints='', learning_rate=0.5, max_bin=256,
# max_cat_threshold=64, max_cat_to_onehot=4, max_delta_step=0,
# max_depth=4, max_leaves=0, min_child_weight=1, missing=nan,
# monotone_constraints='()', n_estimators=10, n_jobs=0,
# num_parallel_tree=1, objective='multi:softmax', predictor='auto', ...)

xgclf.score(X_train, y_train)
# 0.9918032786885246

pred = xgclf.predict(X_test)

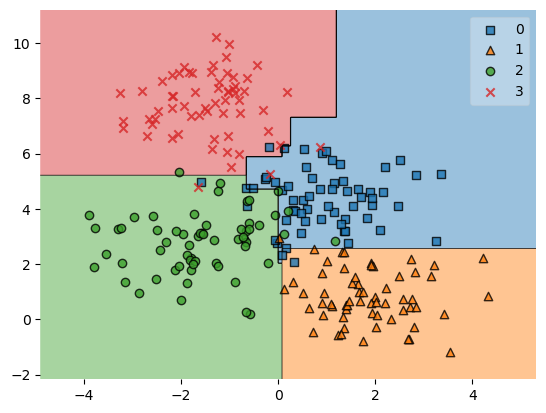

accuracy_score(y_test, pred)
# 0.9245283018867925

plot_decision_regions(X_train, y_train, xgclf)
plt.show()

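Running LightGBM

- Currently one of the most popular algorithms in the boosting family
- XGB outperforms many other algorithms, but it is comparatively slow and uses a lot of memory
- LGB, by contrast, trains faster and uses less memory
- In other words, it keeps XGB's strengths while addressing its weaknesses
- lightgbm.readthedocs.io
- Installation (v2.3.1 as of 2020-01-31)
- pip install lightgbm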

import lightgbm
lightgbm.__version__
# '3.3.3'

# objective: the learning objective (regression, binary, multiclass)
lxgclf = LGBMClassifier(n_estimators=10, learning_rate=0.1, objective='multiclass', max_depth=10)
lxgclf.fit(X_train, y_train)
# LGBMClassifier(max_depth=10, n_estimators=10, objective='multiclass')

lxgclf.score(X_train, y_train)
# 0.9385245901639344

pred = lxgclf.predict(X_test)

accuracy_score(y_test, pred)
# 0.9339622641509434

plot_decision_regions(X_train, y_train, lxgclf)
plt.show()

CatBoost

- categorical boosting

- A boosting model that is particularly strong at prediction on data made up of categorical variables

- catboost.ai

!pip install catboost
# Successfully installed catboost-1.1.1 graphviz-0.20.1 plotly-5.11.0 tenacity-8.1.0

from catboost import CatBoostClassifier

# iterations: number of models (trees) built during training; same as n_estimators
# cat_features: the categorical feature columns (given as indices or names)
# plot: visualize the training process as a graph

cbclf = CatBoostClassifier(n_estimators=50, learning_rate=0.1)
cbclf.fit(X_train, y_train)
# 0: learn: 1.2306456 total: 2.18ms remaining: 107ms
# 1: learn: 1.1181291 total: 4.18ms remaining: 100ms
# 2: learn: 1.0265855 total: 6.45ms remaining: 101ms
# ...
# 48: learn: 0.1957117 total: 97.5ms remaining: 1.99ms
# 49: learn: 0.1926525 total: 99.4ms remaining: 0us
# <catboost.core.CatBoostClassifier at 0x7f2c74909910>

cbclf.score(X_train, y_train)
# 0.9426229508196722

pred = cbclf.predict(X_test)

accuracy_score(y_test, pred)
# 0.9339622641509434

plot_decision_regions(X_train, y_train, cbclf)
plt.show()
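The post imports several metrics (`confusion_matrix`, `f1_score`, `precision_score`, `recall_score`) but reports only accuracy. The sketch below shows how they might be applied to the test predictions of one model. It assumes a `GradientBoostingClassifier` and its own split seed, so the numbers are illustrative. Because the data has four classes, the per-class scores are combined with `average='macro'`.

```python
from sklearn.datasets import make_blobs
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import (confusion_matrix, f1_score,
                             precision_score, recall_score)
from sklearn.model_selection import train_test_split

X, y = make_blobs(n_samples=350, centers=4, random_state=0, cluster_std=1.0)
X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, train_size=0.7, stratify=y, random_state=0)

model = GradientBoostingClassifier(max_depth=5, n_estimators=100, random_state=0)
model.fit(X_tr, y_tr)
pred = model.predict(X_te)

# Rows are true classes, columns are predicted classes
cm = confusion_matrix(y_te, pred)
macro_precision = precision_score(y_te, pred, average='macro')
macro_recall = recall_score(y_te, pred, average='macro')
macro_f1 = f1_score(y_te, pred, average='macro')

print(cm)
print(f"precision={macro_precision:.3f}  recall={macro_recall:.3f}  f1={macro_f1:.3f}")
```

The off-diagonal cells of the confusion matrix show which pairs of blobs get mixed up, which a single accuracy number hides.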
